Cody Potter - Posted on March 24, 2024

Why Your Angular or React App's SEO sucks

Today we're going to get into SEO and one massive limitation of traditional single-page applications (SPAs) that use client-side rendering (CSR): on their own, they can never be properly optimized for search engines and social sharing.

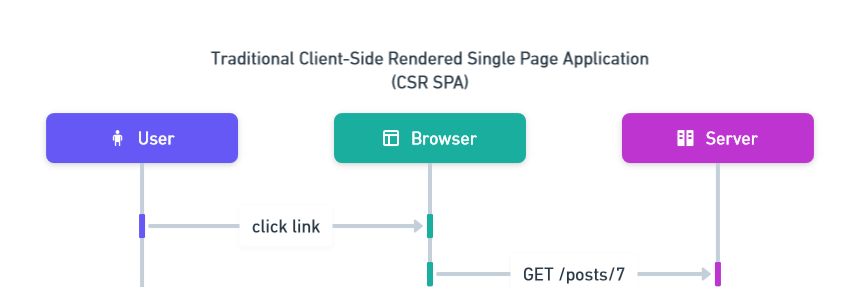

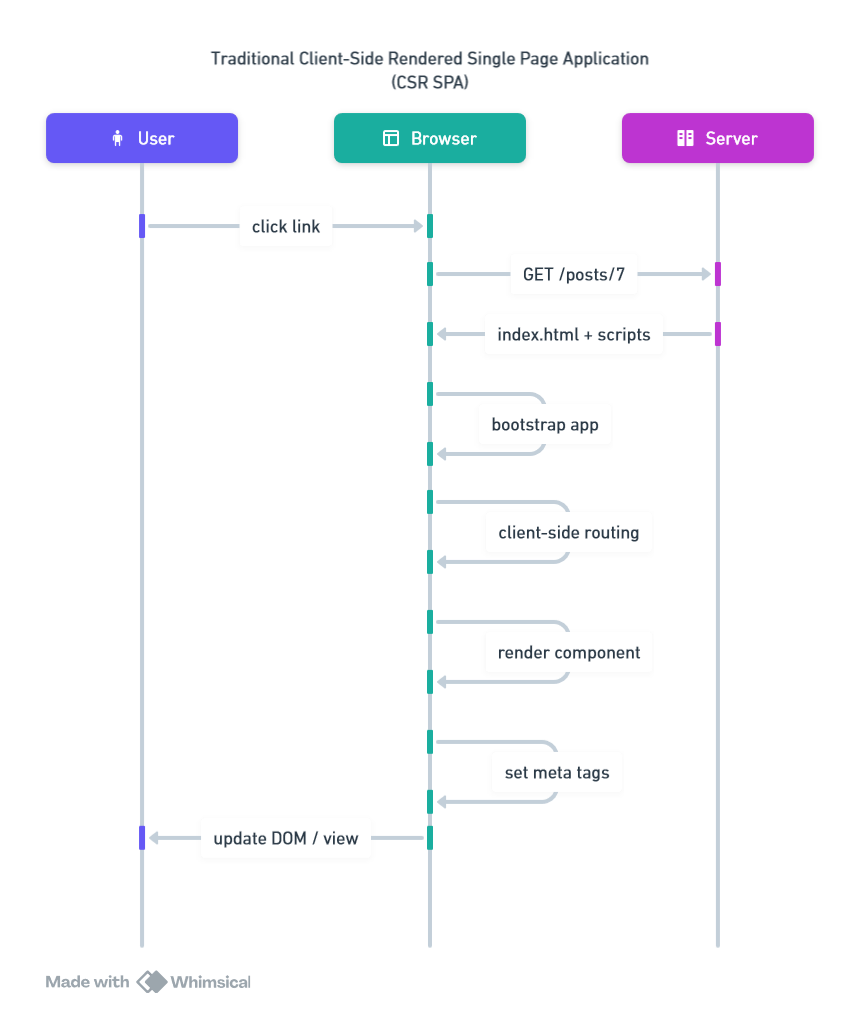

How Traditional CSR SPAs work

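First, a high-level overview:

1. The browser makes the initial GET request.
2. The application bootstraps.
3. The router picks a component for the URL.
4. The component renders and sets the page's title and meta tags.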

Let's look at some examples in React and Angular.

The Initial GET Request

A person's browser makes a GET request to your website at your-website.com/posts/7. In a traditional single-page application, your website has ONE page and ONE index.html. The web server has a catch-all for any path passed to it, and it serves up index.html along with your Angular/React bundle.
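The catch-all behavior can be sketched as a small function. This is only an illustration under assumptions: `resolveFile` and the `staticFiles` list are hypothetical names, not part of Angular, React, or any particular web server.

```typescript
// Hypothetical sketch of a catch-all; not any specific server's API.
const INDEX_HTML = "index.html";

// Files the server can serve directly (assumed names, for illustration only).
const staticFiles: readonly string[] = ["main.js", "styles.css", "favicon.ico"];

/** Decide which file to send back for a requested URL path. */
function resolveFile(urlPath: string): string {
  // Drop the query string and leading slashes: "/main.js?v=2" -> "main.js"
  const [pathOnly = ""] = urlPath.split("?");
  const name = pathOnly.replace(/^\/+/, "");

  // Real static assets are served as-is.
  if (staticFiles.indexOf(name) !== -1) {
    return name;
  }

  // Everything else, including "/posts/7", gets the one and only index.html.
  return INDEX_HTML;
}

console.log(resolveFile("/posts/7"));     // index.html
console.log(resolveFile("/main.js?v=2")); // main.js
```

Whatever path is requested, the server never looks at which post was asked for. That decision is left entirely to the JavaScript running in the browser.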

The response looks like this for Angular:

```html
<!DOCTYPE html>
<html lang="en">
  <head>
    <meta charset="UTF-8">
    <meta name="viewport" content="width=device-width, initial-scale=1.0">
    <title>Angular App</title>
  </head>
  <body>
    <app-root></app-root>
    <!-- Angular App -->
    <script src="path/to/your/angular-app/runtime.js" defer></script>
    <script src="path/to/your/angular-app/polyfills.js" defer></script>
    <script src="path/to/your/angular-app/styles.js" defer></script>
    <script src="path/to/your/angular-app/vendor.js" defer></script>
    <script src="path/to/your/angular-app/main.js" defer></script>
  </body>
</html>
```

Or like this for React:

```html
<!DOCTYPE html>
<html lang="en">
  <head>
    <meta charset="UTF-8">
    <meta name="viewport" content="width=device-width, initial-scale=1.0">
    <title>React App</title>
  </head>
  <body>
    <div id="root"></div>
    <!-- React App -->
    <script src="https://unpkg.com/react@17/umd/react.development.js"></script>
    <script src="https://unpkg.com/react-dom@17/umd/react-dom.development.js"></script>
    <!-- Your React App JS -->
    <script src="path/to/your/react-app.js"></script>
  </body>
</html>
```

Application Bootstrapping

Once your index.html and JavaScript are delivered to the client's browser, the browser executes the JavaScript and your single-page application is bootstrapped.

In Angular it looks like this:

```typescript
import { platformBrowserDynamic } from '@angular/platform-browser-dynamic';
import { AppModule } from './app/app.module';

platformBrowserDynamic().bootstrapModule(AppModule)
  .catch(err => console.error(err));
```

In React:

```jsx
import React from 'react';
import ReactDOM from 'react-dom';
import App from './App';

ReactDOM.render(
  <React.StrictMode>
    <App />
  </React.StrictMode>,
  document.getElementById('root')
);
```

Application Routing

Once the application is bootstrapped, the Angular or React router can begin doing its thing. It reads the requested URL using the window.location API and uses JavaScript to load the correct view/component. In our example, this was /posts/7.

In Angular:

```typescript
// Angular Router example
import { NgModule } from '@angular/core';
import { RouterModule, Routes } from '@angular/router';
import { PostDetailsComponent } from './post-details.component'; // Assuming PostDetailsComponent is your component for displaying post details

const routes: Routes = [
  { path: 'posts/:postId', component: PostDetailsComponent }
];

@NgModule({
  imports: [RouterModule.forRoot(routes)],
  exports: [RouterModule]
})
export class AppRoutingModule { }
```

In React:

```jsx
// React Router example
import React from 'react';
import { BrowserRouter as Router, Route, Switch } from 'react-router-dom';
import PostDetails from './PostDetails'; // Assuming PostDetails is your component for displaying post details

function App() {
  return (
    <Router>
      <Switch>
        <Route path="/posts/:postId">
          <PostDetails />
        </Route>
      </Switch>
    </Router>
  );
}

export default App;
```
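Both routers hide the same basic idea: compare the path from window.location against a pattern and pull out the parameters. Here is a rough sketch of that idea in plain TypeScript. `matchRoute` is a hypothetical helper written for this post, not the actual implementation inside Angular Router or React Router.

```typescript
type RouteParams = Record<string, string>;

/**
 * Match a URL path against a pattern such as "/posts/:postId".
 * Returns the extracted params, or null when the path does not match.
 */
function matchRoute(pattern: string, path: string): RouteParams | null {
  const patternParts = pattern.split("/").filter(Boolean);
  const pathParts = path.split("/").filter(Boolean);

  // Different number of segments means no match in this simplified version.
  if (patternParts.length !== pathParts.length) {
    return null;
  }

  const params: RouteParams = {};
  const matched = patternParts.every((expected, i) => {
    const actual = pathParts[i] ?? "";
    if (expected.charAt(0) === ":") {
      // ":postId" captures whatever is in this position of the path.
      params[expected.slice(1)] = decodeURIComponent(actual);
      return true;
    }
    // Static segments must be identical.
    return expected === actual;
  });

  return matched ? params : null;
}

// In the browser the second argument would be window.location.pathname.
console.log(matchRoute("/posts/:postId", "/posts/7")); // { postId: '7' }
console.log(matchRoute("/posts/:postId", "/about"));   // null
```

The important part for SEO is where this runs: in the browser, after the bundle has been downloaded and executed.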

Rendering a Component

OK, now your app knows which component to render. It loads the component and begins setting all the critical information the web page needs in the DOM, including the page title, description, and any important meta tags. Of course, this process works a little differently between the two frameworks.

In Angular:

```typescript
// Angular example
import { Component, OnInit } from '@angular/core';
import { ActivatedRoute } from '@angular/router';
import { Title, Meta } from '@angular/platform-browser';

@Component({
  selector: 'app-post-details',
  templateUrl: './post-details.component.html',
  styleUrls: ['./post-details.component.css']
})
export class PostDetailsComponent implements OnInit {
  constructor(
    private route: ActivatedRoute,
    private titleService: Title,
    private metaService: Meta
  ) {}

  ngOnInit(): void {
    this.route.paramMap.subscribe(params => {
      const postId = params.get('postId');
      if (postId) {
        this.titleService.setTitle(`Post Details - Angular App`);
        // Set other meta tags or page information here if needed
      }
    });
  }
}
```

In React:

```jsx
// React example
import React, { useEffect } from 'react';
import { useLocation } from 'react-router-dom';

function PostDetails() {
  const location = useLocation();

  useEffect(() => {
    document.title = 'Post Details - React App';
    // Set other meta tags or page information here if needed
  }, [location.pathname]); // Update when location changes

  return (
    <div>
      {/* Post details content */}
    </div>
  );
}

export default PostDetails;
```

The Problem

"OK, OK, none of this is surprising. I've seen all of this boilerplate before."

I hear you, but keen observers can already see the problem.

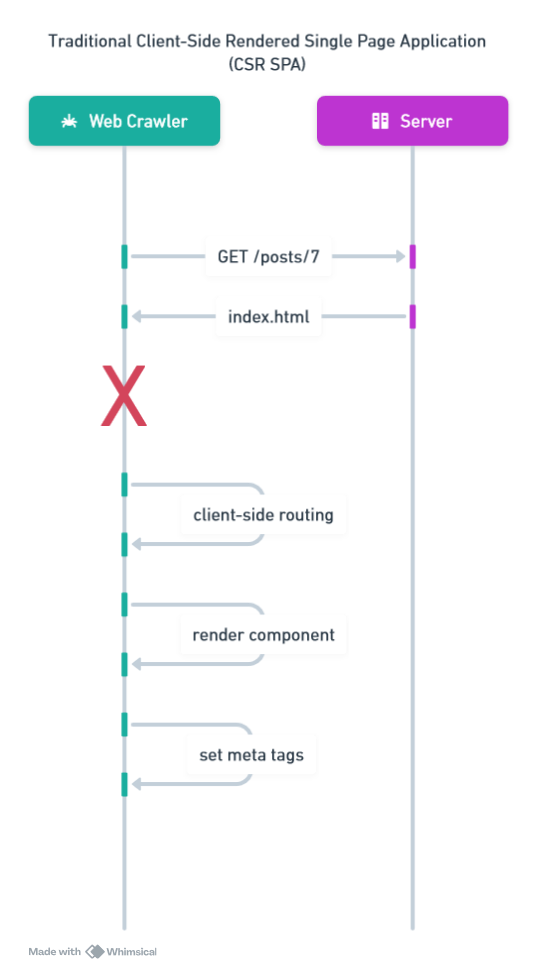

When Google/Bing/DuckDuckGo traverse the internet looking to index content, they use web crawlers. These web crawlers find anchor tags and make GET requests for any link they find -- most search engines also have some kind of console where you can submit your web pages, but we'll get into this later.

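When a web crawler or bot makes a GET request for a link it finds, it often doesn't execute the JavaScript that is returned. Googlebot can render JavaScript, but it does so in a separate, deferred step, and most link-preview bots don't run JavaScript at all. This is the crux of the issue for CSR SPAs like traditional Angular and React apps. Do you see the problem yet?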

We went over all of these steps, but a crawler that doesn't execute JavaScript never gets past step 1. It makes a GET request to a web page, but the actual content of that page is empty and cannot be crawled. It contains a single empty app-root element for Angular or an empty div with the id "root" for React.

Furthermore, any meta tags that are specific to that page are not yet set.
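To make this concrete, here is a rough sketch of what a crawler that skips JavaScript can read from the Angular response shown earlier. `crawlWithoutJs` is a hypothetical helper, and the regular expressions are a deliberate simplification of real HTML parsing.

```typescript
// The Angular shell from earlier in this post, trimmed to what matters here.
const shellHtml = `<!DOCTYPE html>
<html lang="en">
<head><title>Angular App</title></head>
<body>
<app-root></app-root>
<script src="main.js" defer></script>
</body>
</html>`;

/** What a crawler that does NOT run JavaScript can read from a response. */
function crawlWithoutJs(html: string): { title: string; text: string } {
  const titleMatch = /<title[^>]*>([\s\S]*?)<\/title>/i.exec(html);
  const bodyMatch = /<body[^>]*>([\s\S]*?)<\/body>/i.exec(html);
  const body = bodyMatch?.[1] ?? "";

  const text = body
    .replace(/<script[\s\S]*?<\/script>/gi, "") // scripts are dropped, never executed
    .replace(/<[^>]+>/g, " ")                   // strip the remaining tags
    .replace(/\s+/g, " ")                       // collapse whitespace
    .trim();

  return { title: (titleMatch?.[1] ?? "").trim(), text };
}

const seen = crawlWithoutJs(shellHtml);
console.log(seen.title); // Angular App
console.log(seen.text);  // (empty string)
```

The same generic title and the same empty body come back for /posts/7, /posts/8, and every other URL on the site.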

OpenGraph depends on these meta tags being present in the response to that initial GET request. When the meta tags aren't set until after JavaScript executes, the best a developer working on a CSR app can do is set the head meta tags to sensible site-wide defaults. You will never get dynamic OpenGraph previews for links to your website from a traditional client-side rendered single-page application.

OpenGraph

For those unfamiliar with OpenGraph, think of it as a set of standards for meta tags. If you add specific meta tags to your web page, applications that honor the OpenGraph standard know how to render a preview of it. Have you ever wondered how Facebook, LinkedIn, Discord, and Slack show previews of links? It's powered by OpenGraph meta tags. If these meta tags do not exist in the INITIAL HTTP response to the GET request made to your web page, applications have no clue how to preview it.
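As a rough illustration of what a link-preview bot does, the sketch below pulls og: tags out of a raw HTML response. `extractOpenGraph` is a hypothetical helper, the post title, description, and image URL are made-up sample values, and the regular expression assumes double-quoted attributes with property before content, which real parsers do not require.

```typescript
/** Collect <meta property="og:..." content="..."> pairs from raw HTML. */
function extractOpenGraph(html: string): Record<string, string> {
  const tags: Record<string, string> = {};
  const metaPattern = /<meta\s+property="(og:[^"]+)"\s+content="([^"]*)"\s*\/?>/gi;

  let match: RegExpExecArray | null;
  while ((match = metaPattern.exec(html)) !== null) {
    const [, property = "", content = ""] = match;
    tags[property] = content;
  }
  return tags;
}

// A response that already carries page-specific tags (sample values only).
const sharedPostHtml = `<head>
<meta property="og:title" content="Post 7">
<meta property="og:description" content="A short summary of post 7">
<meta property="og:image" content="https://your-website.com/images/post-7.png">
</head>`;

// The React shell from earlier: no og: tags until JavaScript runs.
const csrShellHtml = `<head><title>React App</title></head><body><div id="root"></div></body>`;

console.log(extractOpenGraph(sharedPostHtml)["og:title"]);      // Post 7
console.log(Object.keys(extractOpenGraph(csrShellHtml)).length); // 0
```

With nothing to extract from the CSR shell, a preview card has no title, description, or image to show.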

The Solution

The solution to this problem is to have those meta tags ready in the initial response to the GET request. To accomplish this, you'll need something called server-side rendering (SSR). Instead of serving the same index.html for every GET request, your web server first builds the web page for the requested URL and sends that instead. This gives your application the chance to run some server-side code so that all the meta tags are set properly in the initial response.
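Stripped of any framework, the idea looks roughly like the sketch below. It is not how Angular or React implement SSR. The `Post` shape, `escapeHtml`, and `renderPostPage` are all hypothetical, and a real app would load the post from its API or database before rendering.

```typescript
// Hypothetical post shape, for illustration only.
interface Post {
  id: number;
  title: string;
  summary: string;
}

/** Escape text before placing it inside HTML markup or attribute values. */
function escapeHtml(value: string): string {
  return value
    .replace(/&/g, "&amp;")
    .replace(/</g, "&lt;")
    .replace(/>/g, "&gt;")
    .replace(/"/g, "&quot;");
}

/** Build the full HTML response for one post on the server. */
function renderPostPage(post: Post): string {
  const title = escapeHtml(post.title);
  const summary = escapeHtml(post.summary);

  // Title, description, and og: tags are already in the very first response.
  return `<!DOCTYPE html>
<html lang="en">
<head>
<meta charset="UTF-8">
<title>${title}</title>
<meta name="description" content="${summary}">
<meta property="og:title" content="${title}">
<meta property="og:description" content="${summary}">
</head>
<body>
<div id="root"><h1>${title}</h1><p>${summary}</p></div>
<script src="path/to/your/react-app.js"></script>
</body>
</html>`;
}

console.log(renderPostPage({ id: 7, title: "Post 7", summary: "A short summary of post 7" }));
```

The client-side bundle is still delivered, so the page can behave like an SPA once it loads. The difference is that crawlers and preview bots get real content without running it.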

Next, we'll go over converting a traditional Angular CSR application to SSR and deploying it with basically no extra code on your part.